Introduction

An ETL (Extract, Transform, Load) process is a data integration process commonly used in modern data warehousing and business intelligence initiatives. The ETL process involves extracting data from one or more source systems, transforming that data into a format suitable for analysis, and loading the transformed data into a target system, such as a data warehouse or a data lake. However, in many cases, the data lake is where an ETL process begins rather than where it ends. A data lake is designed to be data agnostic, meaning it can usually store raw, unstructured, and structured data equally well.

When a data lake is used as an ETL destination, it typically means that the transformed data is loaded into the data lake only to be extracted again by another application for immediate use (for example, by data analytics software that uses the lake as its repository).

The “Extract” phase of the ETL process involves extracting data from source systems such as data lakes, databases, spreadsheets, IoT devices, or other data repositories. The data may be structured or unstructured and may arrive in different formats. Quite often, the extracted data is raw, or at the very least unstructured, and cannot be used by most applications in its current form.
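
As a rough illustration, the following Python sketch extracts records from two hypothetical sources: a CSV export and newline-delimited JSON readings from an IoT device. In-memory strings stand in for real files or endpoints, and the function names are illustrative assumptions, not the API of any tool covered in this article. The extracted values are deliberately left as raw strings; cleaning them is the job of the transform phase.

```python
import csv
import io
import json

# Hypothetical source data. In practice these would be files, database
# queries, or API responses rather than in-memory strings.
CSV_EXPORT = """order_id,amount,country
1001,19.99,US
1002,5.00,de
"""

IOT_READINGS = """{"device": "sensor-7", "temp_c": "21.4"}
{"device": "sensor-9", "temp_c": "19.8"}
"""


def extract_csv(text):
    """Read CSV rows as dictionaries; every value is still a raw string."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_json_lines(text):
    """Parse one JSON object per non-empty line (JSON Lines format)."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def extract_all():
    """Pull records from each source without changing their content."""
    return {
        "orders": extract_csv(CSV_EXPORT),
        "readings": extract_json_lines(IOT_READINGS),
    }


if __name__ == "__main__":
    raw = extract_all()
    print(raw["orders"])
    print(raw["readings"])
```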

The “Transform” phase involves cleaning and transforming the extracted data so that it is accurate, complete, and in a consistent format. This phase may include filtering, removing duplicates, enriching, removing redundant data, converting data types, and aggregating data. The goal is to take data that is raw, unstructured, or simply in a different format than the target system requires, and make it usable.
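
To make the transform phase concrete, here is a minimal Python sketch that applies several of the operations listed above (filtering, removing duplicates, converting data types, and aggregating) to a few hypothetical order records. The record layout and function names are illustrative assumptions; real pipelines usually express these steps through a tool's own transformation components or a library such as pandas.

```python
from collections import defaultdict

# Hypothetical raw rows, as an extract step might return them:
# strings everywhere, a duplicate, inconsistent casing, and a bad record.
RAW_ORDERS = [
    {"order_id": "1001", "amount": "19.99", "country": "US"},
    {"order_id": "1002", "amount": "5.00", "country": "de"},
    {"order_id": "1002", "amount": "5.00", "country": "de"},   # duplicate
    {"order_id": "1003", "amount": "", "country": "US"},        # missing amount
    {"order_id": "1004", "amount": "12.50", "country": " us "},
]


def clean(rows):
    """Filter incomplete rows, drop duplicates, normalize, and convert types."""
    seen = set()
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # filter: skip records missing a required field
        if row["order_id"] in seen:
            continue  # de-duplicate on the business key
        seen.add(row["order_id"])
        cleaned.append({
            "order_id": int(row["order_id"]),           # convert data types
            "amount": float(row["amount"]),
            "country": row["country"].strip().upper(),  # consistent format
        })
    return cleaned


def total_by_country(rows):
    """Aggregate cleaned rows into total order amount per country."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["country"]] += row["amount"]
    return dict(totals)


if __name__ == "__main__":
    orders = clean(RAW_ORDERS)
    print(orders)
    print(total_by_country(orders))
```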

The “Load” phase involves loading the transformed data into a central database, such as a data warehouse or cloud repository, where users can easily access and leverage it for data analysis, data processing, or other purposes. This phase may involve validating the data, mapping it to the appropriate schema, and writing it to the target system.
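
The load steps named above (validating the data, mapping it to a schema, and writing it to the target) can be sketched as follows. This minimal example assumes an in-memory SQLite database as a stand-in for a data warehouse; the table name, column names, and validation rules are hypothetical.

```python
import sqlite3

# An in-memory SQLite database stands in for the target data warehouse.
SCHEMA = """
CREATE TABLE IF NOT EXISTS fact_orders (
    order_id INTEGER PRIMARY KEY,
    order_amount REAL NOT NULL CHECK (order_amount >= 0),
    country_code TEXT NOT NULL
)
"""

# Hypothetical mapping from transformed field names to target column names.
COLUMN_MAP = {"order_id": "order_id", "amount": "order_amount", "country": "country_code"}


def validate(row):
    """Reject rows that would violate the target schema."""
    return (
        isinstance(row.get("order_id"), int)
        and isinstance(row.get("amount"), float)
        and row["amount"] >= 0
        and isinstance(row.get("country"), str)
        and len(row["country"]) == 2
    )


def load(conn, rows):
    """Validate rows, map them to target columns, and write them in one transaction."""
    valid = [row for row in rows if validate(row)]
    columns = ", ".join(COLUMN_MAP.values())
    placeholders = ", ".join("?" for _ in COLUMN_MAP)
    sql = f"INSERT INTO fact_orders ({columns}) VALUES ({placeholders})"
    with conn:  # commits on success, rolls back on error
        conn.executemany(sql, [tuple(row[k] for k in COLUMN_MAP) for row in valid])
    return len(valid), len(rows) - len(valid)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    transformed = [
        {"order_id": 1001, "amount": 19.99, "country": "US"},
        {"order_id": 1002, "amount": 5.0, "country": "DE"},
        {"order_id": 1005, "amount": -3.0, "country": "US"},  # fails validation
    ]
    loaded, rejected = load(conn, transformed)
    print(loaded, rejected)
    print(conn.execute("SELECT * FROM fact_orders").fetchall())
```

Writing the batch inside a single transaction means a failure partway through leaves the target unchanged, which is one common design choice for batch loads.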

Let’s look at ETL tools that can help you move data between systems.

Data Importer

Flatirons Fuse is a data importer that handles the “transform” and “load” parts of the ETL process. With Flatirons Fuse, you can bring in tabular data (e.g., CSV or spreadsheet data) from any source, use the importer to perform data transformations and validations that ensure data quality, and then load the data into a new system.

Flatirons Fuse touts itself as the “Intelligent CSV Import Tool for Websites.” The data importer can be installed directly on third-party websites or web applications so customers or operations teams can use it to bring external data into your website.

Apache NiFi

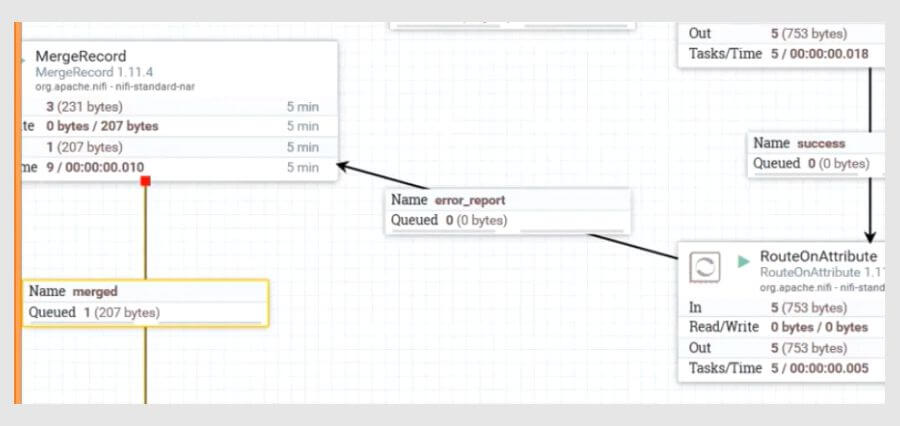

An open-source data integration tool that provides a web-based interface for visually designing, managing, and monitoring data flows. It supports many data sources and destinations, including Hadoop, Kafka, databases, data warehouses, and cloud services. It is very effective for managing data pipelines, and within that functionality, it can also serve as one of the best open-source ETL tools.

With a little effort and configuration, you can automate the extraction of structured and unstructured data from multiple sources, such as IoT devices or cloud services. NiFi can then perform basic data transformations using its processors, which operate on FlowFiles, and transmit that data to a central repository such as a data lake or data warehouse.

Apache NiFi can also serve as an edge processor. Its lightweight, headless edge agent (Apache NiFi MiNiFi) has a tiny footprint, so it can perform minor data processing tasks on location in satellite offices or on distributed IoT devices. Transforming data on-site eases the processing burden at the target system, and NiFi can also schedule and manage data streams from locations with low bandwidth or intermittent connectivity.

At the central location, NiFi can direct incoming data traffic to multiple targets, creating and managing complex data pipelines. It is a tool with many potential use cases, ranging from cloud orchestration and data pipeline management to the simplest ETL or ELT pipeline.

Because it is open-source, it can be used for free. And because it is free, it can be a strong candidate for your ETL software, even though ETL is probably the least of its capabilities. However, you must bring your own (or your team’s) data engineering expertise and invest the time and effort to learn the technology.

Talend

A comprehensive data integration platform that includes a wide range of tools for data integration, data quality, and master data management. It supports various data sources and destinations and provides a user-friendly graphical interface for designing data integration workflows. The extract, transform, and load process is one of the most straightforward workflows Talend can assist with. It is routinely used to extract data from multiple sources, whether structured or unstructured, on-premises or in the cloud, in batches or in real time.

It can also handle basic or advanced data transformations, leveraging Apache Spark to improve data quality, and then load the data into a cloud data warehouse, data lake, or other destination of choice. Talend is often used in data analytics to manage the flow of data and transform it into the correct format for loading into a business intelligence solution.

Talend offers similar functionality to Apache NiFi in that both allow visual representation of complex data flows and are designed to manage pipelines and orchestrate data. However, Talend offers tailored solutions for multiple industries and professional support to build and maintain custom solutions. With open-source tools like NiFi, results depend entirely on your own expertise.

Informatica

A widely used data integration service that provides solutions for data integration, data quality, and data governance. It supports multiple data sources and destinations and offers a range of features for data mapping, transformation, and validation. As with Talend and Apache NiFi, it does far more than a simple ETL process. It can be used effectively for ETL, and that core functionality will likely be used within a more extensive data integration workflow.

For example, one ETL flow might extract structured and unstructured data from multiple sources and transform it before loading it into a data lake. A separate flow might then treat that data lake as a source, extracting and transforming the data again for integration into a cloud data warehouse. However, Informatica prides itself primarily on data integration, billing itself as an iPaaS (Integration Platform as a Service). Its primary focus is its ability to integrate with multiple APIs or apps, including SaaS applications.
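
A two-flow pattern like this, where a data lake is first a destination and then a source, can be sketched in Python. This is a minimal illustration under stated assumptions: a temporary local directory plays the data lake, an in-memory SQLite database plays the cloud data warehouse, and the function names (land_in_lake, lake_to_warehouse) are hypothetical. It does not reflect how Informatica or any other product implements these flows.

```python
import json
import sqlite3
import tempfile
from pathlib import Path

# Assumptions for illustration: a local directory stands in for the data
# lake and an in-memory SQLite database stands in for the cloud warehouse.


def land_in_lake(lake_dir, source_name, records):
    """Flow 1: land extracted records in the lake as raw JSON Lines."""
    path = Path(lake_dir) / f"{source_name}.jsonl"
    with path.open("w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")
    return path


def lake_to_warehouse(lake_dir, conn):
    """Flow 2: use the lake as a source, transform, and load into the warehouse."""
    conn.execute("CREATE TABLE IF NOT EXISTS readings (device TEXT, temp_c REAL)")
    rows = []
    for path in sorted(Path(lake_dir).glob("*.jsonl")):
        with path.open(encoding="utf-8") as f:
            for line in f:
                record = json.loads(line)
                # Transform: convert the raw string reading to a number.
                rows.append((record["device"], float(record["temp_c"])))
    with conn:  # commit the whole batch, or roll back on error
        conn.executemany("INSERT INTO readings VALUES (?, ?)", rows)
    return len(rows)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as lake:
        land_in_lake(lake, "site_a", [{"device": "a1", "temp_c": "20.5"}])
        land_in_lake(lake, "site_b", [{"device": "b1", "temp_c": "18"}])
        warehouse = sqlite3.connect(":memory:")
        print(lake_to_warehouse(lake, warehouse))
        print(warehouse.execute("SELECT * FROM readings").fetchall())
```

Keeping the raw records in the lake means the second flow can be rerun or changed later without going back to the original sources.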

Microsoft SQL Server Integration Services (SSIS)

A popular ETL tool that comes bundled with Microsoft SQL Server. It provides a range of features for data integration, including data extraction, transformation, and loading. It supports many data sources, including XML files, flat files (like CSV), and relational databases. It provides a user-friendly graphical interface for designing data integration workflows, transforms data using data flow transformations configured through their custom properties, and can direct the results to numerous potential destinations. Loading data is the final stage of the ETL process, but not necessarily the last step that SSIS handles.

Summary

ETL (Extract, Transform, Load) is a data integration process used in modern data warehousing and business intelligence initiatives. The typical ETL process involves extracting data from legacy systems or other source systems, transforming the data into a format suitable for analysis, and loading the transformed data into a target system such as a data warehouse or data lake. As you can see in the examples above, many ETL tools are available on the market, some of which are not necessarily billed directly as ETL tools.

Flatirons Fuse is a data importer that handles a vital portion of the ETL process: transformation and data loading. Apache NiFi, Talend, Informatica, and Microsoft SQL Server Integration Services (SSIS) are billed as data pipeline or integration tools rather than as ETL software, but their core functionality includes high-quality features well suited to ETL processes.

It is critical to remember that ETL is often not an end in itself but part of a larger picture, usually a piece of a more comprehensive solution. Each ETL tool provides its own features for data extraction, transformation, validation, and loading, and a combination of tools may offer the best cost-to-benefit ratio for your particular use case. Choosing the best ETL tools for your project depends on your specific requirements.
